New ways to optimize shared datasets in Power BI (1/2) The offline approach

- Brunner_BI

- 21. Feb. 2024

- 6 Min. Lesezeit

Aktualisiert: 20. Jan. 2025

This series has two parts:

The dataset can be downloaded (or we have the .pbix) and we have all reports. We can use the free version of Measure Killer to optimize our model. Read below to get started

The dataset cannot be download or I do not have all connected reports. We need paid external tools for optimization, part 2 can be read here.

For part 2 it is showing Git-integration which is optional for Measure Killer.

As we all know, shared semantic models are a central piece of every BI architecture.

They provide reusable data and a single source of truth for all report developers in our organization.

We invest a lot of time in building them and follow best practices to make them as performant and as small as possible.

However, over time, we might, there are problems:

Problem 1) Our shared models are getting bigger and bigger

-Horizontally (we are asked to add fields, more columns or whole tables)

-Vertically (every day we might get more data and we may not remove old data via incremental refresh policies)

My claim is that all shared datasets are already oversized when deployed. By that I mean that whatever we built it for, some of it will never be queried. It is just impossible to tell in advance.

Problem 2) People have built many reports on top of our dataset (maybe even across workspaces and in dev/test/prod environments)

If we allow developers to build reports in other workspaces and many people have access to them, it can get messy with the data lineage.

We might see this when opening the Power BI impact analysis of the dataset.

There have been 6 reports built on top of our dataset in workspaces where we do not have access (this also applies to Tenant Admins unless they specifically gave themselves access to those workspaces but it is not so straight forward to find out)

Here is a full view of the impact analysis of our shared dataset

So what are we going to do about these problems?

Solution

We can leverage external tools to get the full lineage of our datasets and optimize them. I am especially talking about removing columns and measures nobody needs.

Meaning we will be able to identify what columns/measures we can remove because they are not used in the model or any connected reports.

Here are two scenarios of how our setup can look like:

The dataset can be downloaded (or we have the .pbix) and we have all reports.

The dataset cannot be download or I do not have all connected reports.

Follow this link to see the article for scenario 2

This article only focuses on scenario 1, for scenario 2, where all files are in the Power BI Service, please follow this link.

Scenario 1)

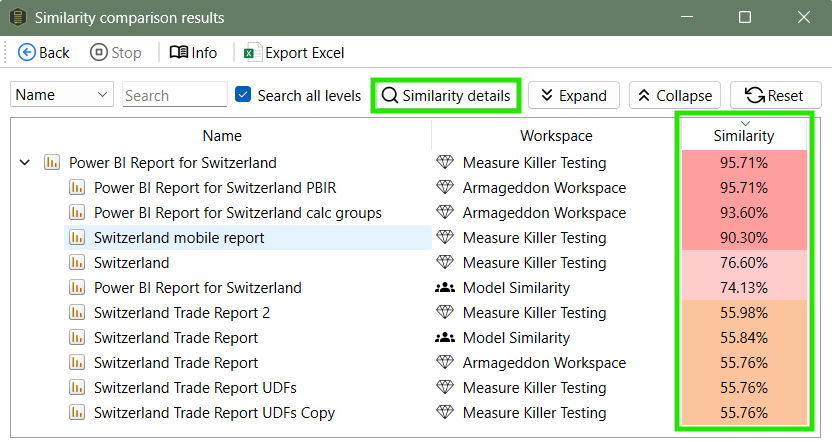

In this case, we can leverage the free version of Power BI external tool "Measure Killer" to analyze our model and all reports. We just need to put everything manually into the tool. Let me show you how:

This is part of the free version of the tool and is exactly what we need.

First I get a warning that I do not have any files open currently. I need to open the dataset file in Power BI Desktop to proceed.

After I click on refresh ports, I can see that my file was correctly identified.

It is now asking if I want to add the pages of the dataset. This means that whatever is used on any page of the dataset will also be taken into account for the analysis.

I click on yes and then need to select the file again.

After clicking on next, the main window will appear.

Now I can add my reports (thin files) that are connected to the dataset in the Power BI Service. I need to have these files on my machine and can drag and drop them into the box on the left.

Now I can see the reports and the dataset in the list.

After clicking on run, it will do its magic.

As you can see, I switched to dark mode.

We get a summary at the left bottom where we can see at one glance, how much of the size of our data model is used anywhere.

Anywhere? Yes, besides some very specific limitations you can read here, it checks if something is used in the model (relationships, column sorting, measures, calculated columns etc.) as well as in the report (visuals, filters, conditional formatting etc.) and it also checks your Power Query code.

The promise of the tool is that you can remove the unused columns and measures without anything breaking down in any report.

Now we only want to clean up our model quickly and do not care about the details, so let us go to "Kill measures and calculated columns".

We will just go ahead and click on "Kill all". Now it will write to the Power BI file we have open currently and remove the measures and calculated columns directly.

Always try to keep backups, if you did not, you will still have the "Restore" function where Measure Killer keeps backups of everything it ever removed (the backups can also be synced in a OneDrive folder).

We will get a summary of what is has removed and potentiall we will see this:

Those were the artifacts flagged yellow in the previous output. Since "Age" and "types" are not measures or calculated columns they will not appear in this window but will be part of the "Kill columns" window.

For "Kill columns" we get an M code we can copy paste into Power Query.

The dropdown on top shows all tables of my dataset that contain unused columns, the list is sorted by the total size I can free up.

When I paste the M code into Power Query's Advanced Editor (of the 'people' query in my case) and replace the old code that way, it takes the old query and just adds one step at the end where it removes the unused columns.

I can see the effect when I click on "done".

This is a safe way to remove unused columns because I can always either remove the step generated by the tool or if I selected "Remove other columns" in the Measure Killer window, I can click on the little wheel icon on the very right in "Applied steps" and change the columns I want to keep.

After doing this for every query/table that Measure Killer suggested, we can click on "Close & apply" in Power Query to finish removing our unused columns.

After our data has completed loading, we can run Measure Killer again.

We have successfully reached 100% used, which means everything that is taking up any serious storage in our model is needed somewhere.

We still have some rows that are red, those are report-level measures that are actually part of the thin files (live reports) and not the data model. So it is okay to leave them in - Measure Killer cannot remove them.

Now as a little trick I want to make you aware that even if something is only used once, we might be able to remove it. This could be if all columns in a table but one are unused. Now if that one column that is used is only used in a relationship we might be able to kick it and the whole table out. So it might be worth going through the most costly artifacts that are only used once.

Another case that might apply: if something is only used on a hidden page and the page is only used for testing. Maybe you can remove something there as well!

This concludes part 1 of this series, see part 2 to find out how we can optimize the same model if it is stuck in the Power BI Service (we cannot download it) or we do not have all connected reports or are unsure in which workspaces they are. For part 2 we will have to harness the paid version of Measure Killer.

Git-integration helps us keep a version history of our model before Measure Killer optimizes it. If we do not have Git-integration we need to somehow keep a version of the model as a backup, Measure Killer will not back anything up in this case.

Kommentare